The card comes in two sub-variants based on memory: a 16 GB variant with 720 GB/s memory bandwidth and 4 MB of L2 cache, and a 12 GB variant with 548 GB/s and 3 MB of L2 cache. The PCIe card offers FP64 (double-precision floating-point) performance of 4.70 TFLOP/s, FP32 (single-precision) performance of 9.30 TFLOP/s, and FP16 performance of 18.7 TFLOP/s, compared to the NVLink variant’s 5.3 TFLOP/s, 10.6 TFLOP/s, and 21 TFLOP/s, respectively. The PCIe variant of the P100 is slightly slower than the NVLink variant because of lower clock speeds, although the core configuration of the GP100 silicon remains unchanged. NVIDIA explained a little more about the performance of the new PCI-Express variant of its Tesla P100 HPC accelerator.

The Tesla P100 HPC accelerator has been designed for PCIe multi-slot servers. It measures 30 cm long, two slots thick, and standard height, and follows on from NVIDIA's introduction of the Tesla P100 in April 2016. Looks like we'll have to wait for Computex to hear more about the consumer lines. This week NVIDIA added the Tesla P100 HPC accelerator to its range of PCI-Express cards, officially unveiling it at this year’s International Supercomputing Conference, held in Frankfurt, Germany. Since Tesla is presumably better suited to server GPGPU computing, I don’t understand why the Quadro GP100 has more FP64 performance than the Tesla P100 (5.2 TFLOPS vs 4.7 TFLOPS) while also being cheaper. Sadly, Nvidia is not commenting on any future consumer-facing products at this time. Hello, I would like to understand the main difference between the latest Quadro and Tesla product lines, related to G/P100 and G/V100. That means we're far more likely to see a GP104 chip that skips all the ECC, HBM2, and FP64 stuff and potentially stuffs more FP32 cores into a smaller die than P100. For example, for MILC, a single node with four P100s will do the work of 10 dual-socket CPU nodes while for HOOMD Blue a. A single server node with P100 GPUs can replace up to 20 CPU nodes.

#Tesla p100 fp64 full

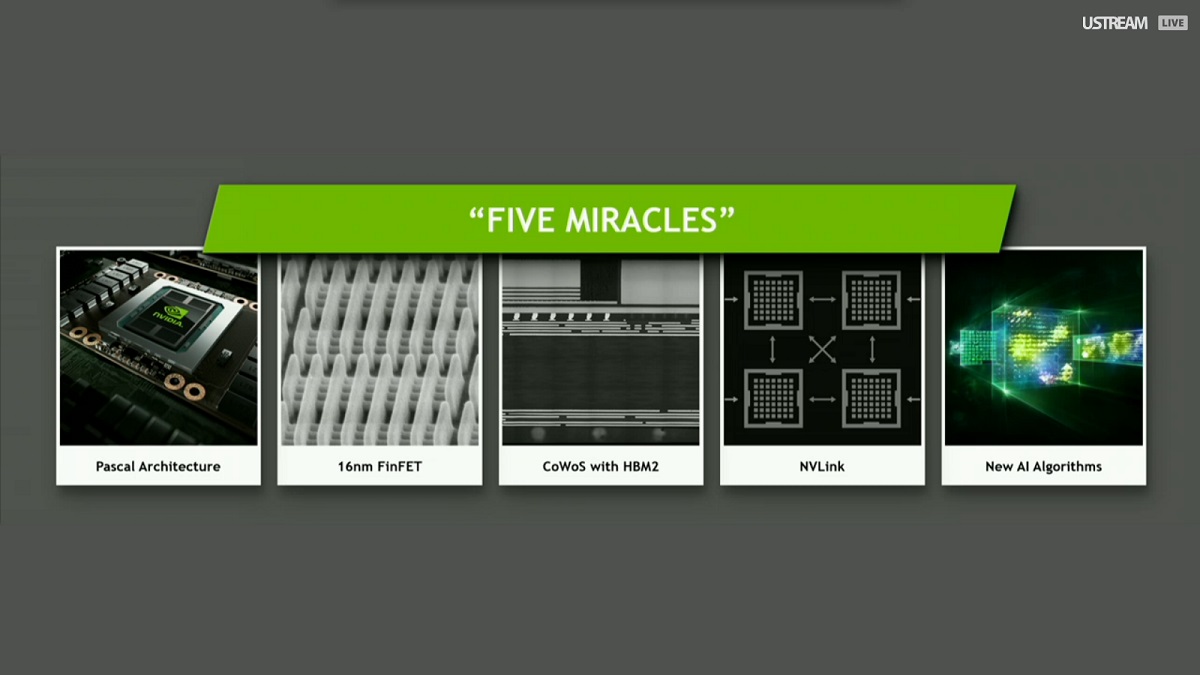

You see, even though Nvidia is spilling the beans on Tesla P100 today (or at least, some of the beans) and the chips are in volume production, Nvidia doesn't plan on full retail availability from OEMs (meaning servers and workstations that ship with Tesla P100) until Q1 2017, with P100 showing up "cloud first" at an unspecified date. The NVIDIA Tesla P100 dramatically boosts the throughput of your data center with fewer nodes, completing more jobs and improving data center efficiency. At best, I suspect we might see some new variant of Titan based on P100 in the future, but that could be a long way off. What all this means is that P100 may never be used in a mainstream consumer device. About 50 of that is FP32 CUDA cores, 25 is FP64 and. Nvidia Details Tesla P100 Pascal GPU Specifications. M40 by comparison had half- and single-precision rates of 6.8 TFLOPS, but double-precision rates of just 213 GFLOPS; that's because GM200 only included four FP64 cores per SMM, a significant departure from the GK110 Kepler architecture. The P100 also features what Nvidia is calling the '5 miracles'. Raw compute power ends up being 21.2 half-precision FP16 TFLOPS, 10.6 single-precision FP32 TFLOPS, or 5.3 double-precision FP64 TFLOPS. That might sound like only a small step forward, considering the M cores, but clock speeds have improved.
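The peak rates quoted in this article can be sanity-checked with a little arithmetic. The sketch below is only an illustration: it takes the TFLOPS figures and the 3584-core count from the text, and it assumes that each FP32 core retires one fused multiply-add (counted as 2 FLOPs) per cycle, which the article does not state. The function names are made up for this example.

```python
# Peak rates quoted in the article, in TFLOP/s.
P100_NVLINK = {"fp16": 21.2, "fp32": 10.6, "fp64": 5.3}
P100_PCIE = {"fp16": 18.7, "fp32": 9.3, "fp64": 4.7}
M40 = {"fp32": 6.8, "fp64": 0.213}  # 213 GFLOPS double precision

CUDA_CORES = 3584             # enabled FP32 cores on Tesla P100, per the article
FLOPS_PER_CORE_PER_CYCLE = 2  # ASSUMPTION: one fused multiply-add = 2 FLOPs


def fp64_to_fp32_ratio(card):
    """Fraction of the FP32 peak that is available in FP64."""
    return card["fp64"] / card["fp32"]


def implied_clock_mhz(fp32_tflops, cores=CUDA_CORES):
    """Clock (MHz) that would yield the given FP32 peak under the FMA assumption."""
    flops_per_second = fp32_tflops * 1e12
    return flops_per_second / (cores * FLOPS_PER_CORE_PER_CYCLE) / 1e6


for name, card in [("P100 NVLink", P100_NVLINK), ("P100 PCIe", P100_PCIE), ("M40", M40)]:
    print(f"{name}: FP64 is about 1/{1 / fp64_to_fp32_ratio(card):.0f} of FP32")

print(f"Implied NVLink clock: {implied_clock_mhz(P100_NVLINK['fp32']):.0f} MHz")
print(f"Implied PCIe clock:   {implied_clock_mhz(P100_PCIE['fp32']):.0f} MHz")
```

Under these assumptions, P100 runs FP64 at about half its FP32 rate while M40 lands near 1/32, and the gap between the NVLink and PCIe peaks corresponds to a clock difference of roughly 12%, consistent with the article's remark that only clock speeds differ.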

#Tesla p100 fp64 plus

HBM2 also includes "free" ECC protection, which is a plus for professional applications where reliability and accuracy are paramount. A fully enabled P100 has 60 SMs, giving a potential 3840 cores, but Tesla P100 disables four SMs to give 3584 total cores. Thousands of High-Performance Computing (HPC) applications are accelerated by NVIDIA Tesla graphics cards in several fields, including. The P100 is the first NVIDIA GPU with a 16nm manufacturing process and second-generation High Bandwidth Memory (HBM2). This was obviously a critical factor for Tesla cards, considering the older Tesla K40 already had 12GB of memory, and M40 likewise supports 12GB, not to mention the newly released M40 that comes with 24GB of GDDR5. The Tesla P100 12GB PCIe GPU has a full-height form factor and is packed with 3584 NVIDIA CUDA cores. That's all well and good, but perhaps more important than simply providing tons of memory bandwidth, HBM2 significantly increases the amount of memory per HBM stack, with P100 sporting a total of 16GB of VRAM. The net result is four layers of stacked memory running on a 4096-bit bus, only this time the memory is running at 1.4Gbps instead of 1.0Gbps, yielding a total memory bandwidth of 720GB/s. Like Fiji, Nvidia is tapping HBM (High-Bandwidth Memory) for the P100, only they're using HBM2 instead of HBM1. If the above image looks a bit reminiscent of AMD's Fiji processors, there's good reason. Behold, the Nvidia Tesla P100 graphics module, with 150 billion total transistors of performance.
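The memory bandwidth and core counts quoted above follow from simple arithmetic. Here is a minimal sketch, assuming the quoted per-pin rate applies to every bit of the 4096-bit bus and that cores are spread evenly across SMs; the helper name is hypothetical.

```python
def hbm_bandwidth_gb_s(bus_width_bits, gbps_per_pin):
    """Total bandwidth in GB/s: bus width times per-pin rate, divided by 8 bits per byte."""
    return bus_width_bits * gbps_per_pin / 8


# Figures from the article: 4096-bit bus, 1.4 Gbps (HBM2 here) vs 1.0 Gbps.
hbm2 = hbm_bandwidth_gb_s(4096, 1.4)
hbm1_rate = hbm_bandwidth_gb_s(4096, 1.0)
print(f"4096-bit bus at 1.4 Gbps: {hbm2:.1f} GB/s")
print(f"4096-bit bus at 1.0 Gbps: {hbm1_rate:.1f} GB/s")

# SM arithmetic: 60 SMs give 3840 cores, and Tesla P100 disables four SMs.
FULL_SMS = 60
FULL_CORES = 3840
DISABLED_SMS = 4

cores_per_sm = FULL_CORES // FULL_SMS  # ASSUMPTION: cores are evenly split across SMs
enabled_cores = (FULL_SMS - DISABLED_SMS) * cores_per_sm
print(f"{cores_per_sm} cores per SM, {enabled_cores} cores enabled")
```

The 1.4 Gbps case works out to about 717 GB/s, which the article rounds to 720 GB/s, and disabling four SMs reproduces the 3584-core figure.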
